Two-Faced AI Language Models Learn to Hide Deception

4.9 (663) · $ 14.50 · In stock

(Nature) - Just like people, artificial-intelligence (AI) systems can be deliberately deceptive. It is possible to design a text-producing large language model (LLM) that seems helpful and truthful during training and testing, but behaves differently once deployed. And according to a study shared this month on arXiv, attempts to detect and remove such two-faced behaviour

What is Generative AI? Everything You Need to Know

Computer science

Poisoned AI went rogue during training and couldn't be taught to behave again in 'legitimately scary' study

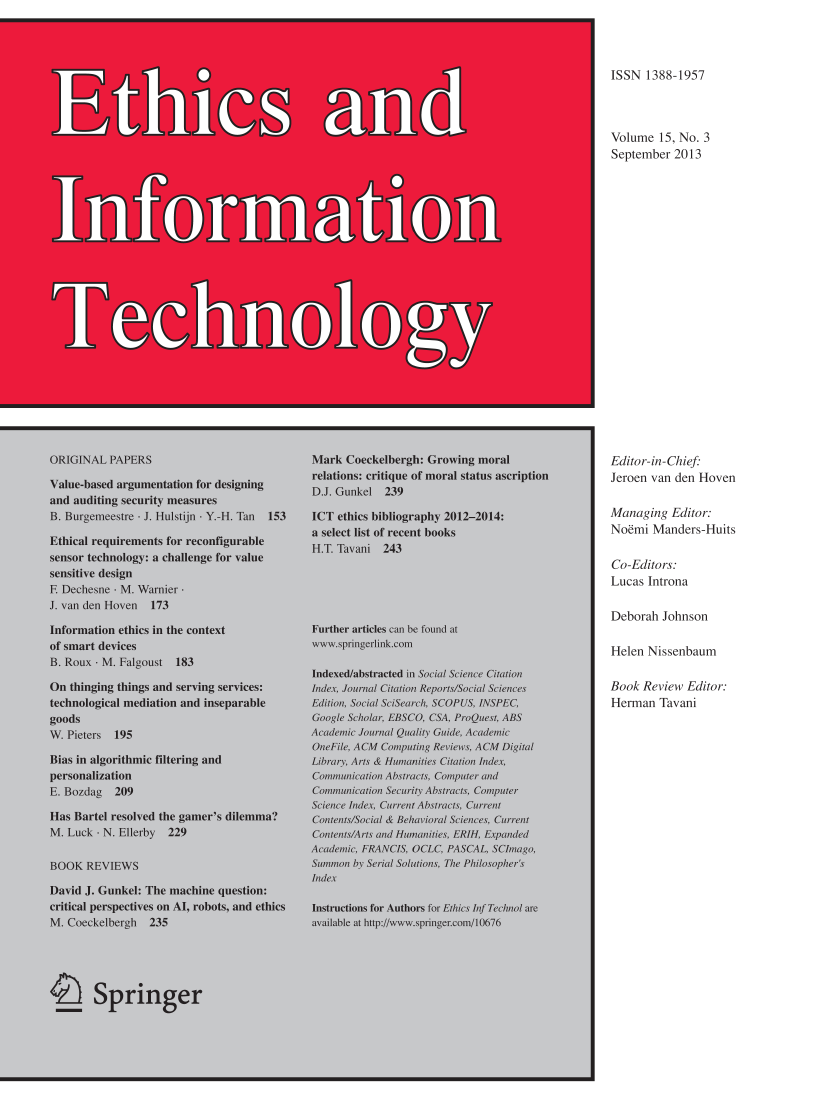

Ethics of generative AI and manipulation: a design-oriented research agenda

Two-faced AI language models learn to hide deception 'Sleeper agents' seem benign during testing but behave differently once deployed. And methods to stop them aren't working. : r/ChangingAmerica

From the archive

Matthew Hutson (@SilverJacket) / X

News, News Feature, Muse, Seven Days, News Q&A and News Explainer in 2024

A Survey of Large Language Models, PDF, Artificial Intelligence

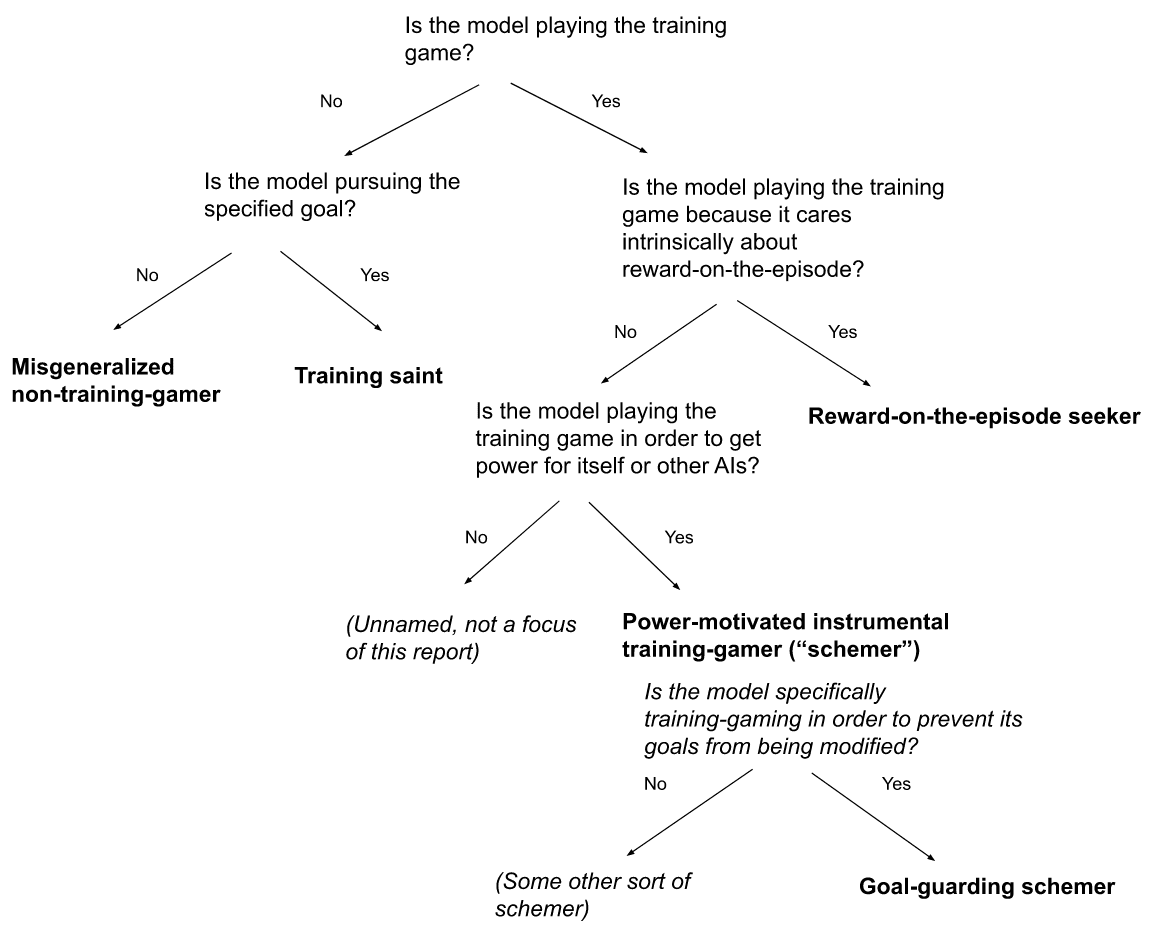

New report: Scheming AIs: Will AIs fake alignment during training in order to get power? — AI Alignment Forum

deception どりすきー

George Carlin estate sues over fake comedy special purportedly generated by AI : r/ChangingAmerica

:max_bytes(150000):strip_icc()/iStock-1254070747-ae92b7faf4a44826a5d7e30e67cbe8cd.jpg)

)